What is aerial imagery and how can it be interpreted with AI?

Airborne systems equipped with sensors can acquire digital observations of our planet at low cost over wide areas, including areas that are hard to access (e.g. mountainous environments). Technological advances have led to lighter, smaller systems that provide finer observations. In this project, the aerial imagery is acquired by UAV and consists of color images, multispectral images (which also cover the invisible spectrum, such as infrared wavelengths), and 3D point clouds.

- Visible images (visible red, green and blue) over Bocard area

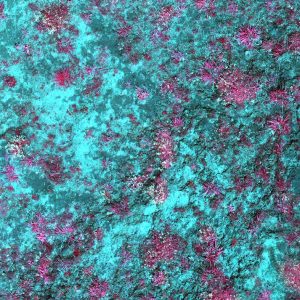

- Multispectral image (false-color composition with infrared) over Bocard area

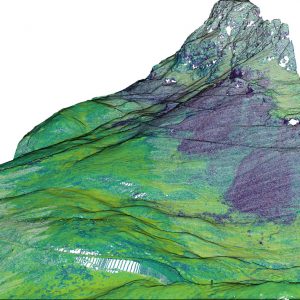

- LiDAR elevation map over Chichoue area

- 3D LiDAR point cloud over Chichoue area

The increasing availability of massive amounts of remotely sensed data also comes with a cost: the need for automated methods to analyze such data and to generate meaningful knowledge from it. Recently, this need has mostly been met by Artificial Intelligence techniques, which have proven successful in a wide range of fields. When applied to digital images, such techniques can help, for instance, to recognize a scene and the objects it contains. Still, their application to ecological data remains limited, possibly because of the numerous scientific challenges it raises. In this project, AI techniques will help to automatically assess the spatial distribution of plants.

Objectives of this research axis:

The objective is to combine airborne data and in-situ species characterization in a deep learning framework in order to map species. We assume that using these multiple sources in a multi-task deep neural network should make it possible to derive high-resolution information beyond the standard land cover mapping achieved with semantic segmentation networks (which usually fail to extract precise object contours). This will enable the analysis of the emerging patterns of multiple plant interactions at the community scale, and illustrate the potential of Artificial Intelligence (AI) in ecology.

To reach this objective, we will carry out the following tasks: acquiring the multispectral data; designing a deep network able to perform semantic segmentation at ultra-high spatial resolution; learning to unmix or disentangle multispectral images in order to distinguish individual species within mixed stands; coupling optical and LiDAR information in a multi-modal, multi-task deep architecture; and coupling deep learning with physical models to estimate biophysical parameters (i.e. plant traits).

Main researchers:

Sébastien Lefèvre, IRISA lab / OBELIX team, Full Professor at the University of South Brittany (UBS).

Thomas Corpetti, LETG lab / OBELIX team, Senior Scientist at CNRS.

Thomas Dewez, Scientist at BRGM.

Benjamin Pradel and Marie Deboisvilliers, Engineers at L’Avion Jaune.

Florent Guiotte, IRISA lab, Postdoc, developing novel AI methods for ultra-high resolution imagery, with the help of L’Avion Jaune company.

Hoàng-Ân Lê, IRISA lab, Postdoc, developing novel AI methods for processing 3D point clouds.

Main achievements:

We first demonstrated that existing methods for semantic segmentation or object detection, trained on general-purpose image datasets, are largely inadequate for mapping plants from sub-centimeter resolution images. Although semantic segmentation is the standard task for producing land cover maps in remote sensing, we observed that object detection is more suitable in this case, as the goal is to identify plants and their locations rather than to assign a class to each pixel (each pixel covering only a few millimeters).

We also validated the relevance of a data partitioning strategy in a semi-supervised framework, which facilitates the design of field measurement campaigns. To address the spatial noise present in the labels, we developed a label fuzzification approach (Figure, panel A), although the results remain insufficient for large-scale deployment.
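The text above does not describe how the labels are fuzzified. As a minimal sketch of one common way to soften spatially noisy point labels, the example below spreads each annotated position into a Gaussian-weighted neighbourhood, so that a small positional error in the annotation is penalized less than a large one. This is an assumption made for illustration, not the project's actual method; the function name `fuzzify_labels`, the `sigma` parameter, and the grid size are all hypothetical.

```python
import math


def fuzzify_labels(points, height, width, sigma=2.0):
    """Turn hard point labels into a soft (fuzzy) label map.

    points: list of (row, col) annotated positions (hypothetical input format).
    Returns a height x width grid of membership values in [0, 1]; each cell
    holds the strongest Gaussian response among all annotated points.
    """
    grid = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            for pr, pc in points:
                # Squared pixel distance to the annotated position.
                d2 = (r - pr) ** 2 + (c - pc) ** 2
                weight = math.exp(-d2 / (2.0 * sigma ** 2))
                # Keep the maximum so overlapping points never exceed 1.
                if weight > grid[r][c]:
                    grid[r][c] = weight
    return grid


# One annotated plant at the centre of a 5 x 5 patch (illustrative values).
soft = fuzzify_labels([(2, 2)], height=5, width=5, sigma=1.0)
print(round(soft[2][2], 3))  # membership at the annotated pixel
print(round(soft[2][3], 3))  # membership one pixel away
```

In such a scheme, `sigma` would typically be chosen to match the expected positioning error of the field annotations, expressed in pixels.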

We also developed several models (Figure, panel B), including a new semi-supervised approach, to estimate biophysical variables (plant height, volume and cover, biomass), and obtained very promising results by leveraging unlabelled images alongside a few annotated ones.

Finally, we designed a method combining 2D rasterization and generative adversarial models to learn how to produce a digital terrain model from a 3D point cloud.
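To illustrate the 2D rasterization step only, the sketch below projects a 3D point cloud onto a regular grid and keeps the lowest elevation in each cell, a common rough starting point for ground estimation. The generative adversarial model is not shown, and the source does not specify which rasterization is used, so the per-cell minimum, the function name `rasterize_min_z`, and the input format are assumptions made for illustration.

```python
def rasterize_min_z(points, cell_size):
    """Project 3D points onto a 2D grid, keeping the lowest z per cell.

    points: iterable of (x, y, z) tuples (illustrative input format).
    Returns grid[row][col] with the minimum elevation seen in each cell,
    or None for empty cells. Row 0 corresponds to the smallest y values.
    """
    points = list(points)
    x_min = min(p[0] for p in points)
    y_min = min(p[1] for p in points)

    def cell_index(value, origin):
        # Same expression for sizing and filling, so indices stay in range.
        return int((value - origin) // cell_size)

    cols = cell_index(max(p[0] for p in points), x_min) + 1
    rows = cell_index(max(p[1] for p in points), y_min) + 1
    grid = [[None] * cols for _ in range(rows)]

    for x, y, z in points:
        row = cell_index(y, y_min)
        col = cell_index(x, x_min)
        current = grid[row][col]
        if current is None or z < current:
            grid[row][col] = z
    return grid


# Four points on a 1 m grid (illustrative values).
cloud = [(0.0, 0.0, 5.0), (0.4, 0.2, 3.0), (1.5, 0.1, 7.0), (0.2, 1.8, 4.0)]
grid = rasterize_min_z(cloud, cell_size=1.0)
for row in grid:
    print(row)
```

Empty cells are left as `None` here; a learned model, such as the generative approach mentioned above, would be one way to fill or refine them.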